We got our first taste of the RTX 40 series on September 20th, 2022, and we now know most of the specs of the RTX 4080 and 4090. There's no doubt they trump the RTX 30 series, but by how much? RTX 4080 sales only began on November 16, so availability is limited and there isn't much user feedback yet, but we know enough to make a fair comparison.

This 4080 vs 3080 comparison explains how much better Ada Lovelace is than Ampere, what DLSS 3 brings to the table, and what ray-traced games are likely to look like in the foreseeable future. I'll also look at the RTX 4080's in-game performance in the games tested so far. So, let's dive in.

4080 vs 3080 – Quick Comparison

| RTX 4080 | Specs | RTX 3080 |
|---|---|---|
| AD103-300-A1 | GPU Variant | GA102-200-KD-A1*1, GA102-202-KD-A1 (LHR)*1, GA102-220-A1 (12 GB)*2 |
| PCIe 4.0 x16 | Interface | PCIe 4.0 x16 |
| 9,728 | CUDA Cores | 8,704*1, 8,960*2 |
| 304 | Tensor Cores | 272*1, 280*2 |
| 304 | TMUs | 272*1, 280*2 |
| 76 | RT Cores | 68*1, 70*2 |
| 2,210 MHz | Base Clock (Founders Edition) | 1,440 MHz*1, 1,260 MHz*2 |
| 2,505 MHz | Boost Clock (Founders Edition) | 1,710 MHz |
| 16 GB GDDR6X | Memory | 10 GB GDDR6X*1, 12 GB GDDR6X*2 |
| 1,437 MHz (22.4 Gbps effective) | Memory Speed | 1,188 MHz (19 Gbps effective) |
| 716.8 GB/s | Bandwidth | 760 GB/s*1, 912 GB/s*2 |
| 256-bit | Memory Bus | 320-bit*1, 384-bit*2 |
| 320 W | TDP (Founders Edition) | 320 W*1, 350 W*2 |
| 750 W | Required PSU (Founders Edition) | 750 W |
| 90℃ (194℉) | Maximum Temperature | 93℃ (199.4℉) |
| N/A | Highest Recorded Temperature (Founders Edition) | 79℃ (174.2℉)*1, 82℃ (179.6℉)*2 |
| 1x HDMI 2.1, 3x DisplayPort 1.4a | Outputs (Founders Edition) | 1x HDMI 2.1, 3x DisplayPort 1.4a |
| 1x 16-pin | Power Connectors (Founders Edition) | 1x 12-pin |

*1 – 10 GB version

*2 – 12 GB version

Nvidia GeForce RTX 4080

The Nvidia GeForce RTX 4080 was announced on September 20th, 2022, and sales started on November 16th. Nvidia claims the RTX 40 series delivers up to 2-4x the performance of the previous generation. This enthusiast-class card has 9,728 CUDA Cores paired with 16 GB of GDDR6X VRAM running at an effective 22.4 Gbps. A 12 GB version was also announced but canceled soon after.

While the Ampere generation had what I consider relatively low clock speeds, the RTX 4080's base and boost clocks are roughly 50% higher than its predecessor's. Its memory clock is also higher than the 3080's, but its narrower memory bus gives it less bandwidth. Regardless, it delivers a higher effective speed, and there's no doubt that the 4080's VRAM is on par with the rest of its configuration.

Pros:

- Better performance

- Larger VRAM

- Same or lower TDP

- Higher clock speeds

Cons:

- 50-72% higher MSRP

- Size

Nvidia GeForce RTX 3080

The Nvidia GeForce RTX 3080 10 GB version was released in September 2020. A 3080 LHR (Lite Hash Rate) version started shipping in May 2021 as part of Nvidia's effort to make its gaming cards less attractive to crypto miners. In January 2022, Nvidia released the 12 GB version, based on a slightly different GPU with 8,960 CUDA Cores instead of the 8,704 in the 10 GB version.

The RTX 3080 12GB also features more TMUs, RT, and Tensor Cores, a lower base clock, and larger bandwidth than the 10 GB version. These enhancements were accompanied by a higher price tag, of course.

Pros:

- Lower MSRP

- Higher bandwidth

- Solid performance

Cons:

- Smaller VRAM

- Fewer cores

Nvidia

Nvidia will celebrate its 30th anniversary in 2023. In 1999, it released the GeForce 256, which it marketed as the world's first GPU. Since then, every GPU generation has brought better gaming possibilities, culminating with Ada Lovelace. Nvidia is now the power behind data centers, AI development projects, and academic research, and its consumer-grade GPUs, such as RTX cards, are somewhat of a byproduct nowadays.

4080 vs 3080 – Key Specifications

Architecture

Ada Lovelace

Nvidia's newest GPU microarchitecture, Ada Lovelace, is hard to compete with. It's the latest in a long line of exceptional microarchitectures from the manufacturer, and we're told it was designed to provide revolutionary performance for ray tracing and AI-based neural graphics.

It features fourth-generation Tensor Cores that increase throughput by up to 5x and third-generation RT Cores with double the ray tracing performance of Ampere. Nvidia also introduced Shader Execution Reordering (SER), which "dynamically reorganizes" previously inefficient workloads, improving ray tracing shader performance by up to 3x and in-game frame rates by up to 25%.

Ampere

Ampere was also a generational leap compared to its predecessor, Turing. It improved on Turing’s first-gen RT Cores, second-gen Tensor Cores, and many other valuable features. It was designed for much more than gaming and is the backbone of many research and development projects around the world.

Winner: RTX 4080


Design and Build

The RTX 4080 reference card is a triple-slot card. About half of the third-party variants announced so far are quad-slot or nearly so, including all of the ASUS variants and two of the four MSI variants, with sizes ranging from 3.15 to 3.65 slots. I guess that's how we're labeling graphics cards from now on.

Some are exceptionally heavy, weighing up to 5 kg (11 lbs), and that's just the air-cooled models, not counting water-cooled variants with their additional radiators. In comparison, the RTX 3080 Founders Edition is a dual-slot card. Many of its third-party variants are triple-slot, and only four are quad-slot cards.

Winner: RTX 4080

Core Configuration

GPUs are parallel processors with thousands of cores. Nvidia calls its cores CUDA Cores. As expected, Nvidia upped the core count in the RTX 4080 when compared to the 3080. The RTX 4080 has 9,728, the RTX 3080 10 GB has 8,704, and the 12 GB version has 8,960 CUDA cores.

GPU cores are often called shader units because they execute shaders: small programs that calculate lighting, shadows, color, and other effects whenever a 3D scene is rendered. Shaders arguably play the most important role, because your GPU wouldn't be able to draw anything without them.

Shaders

Shaders run on the GPU cores to create the effects I mentioned and much more. They're written for graphics APIs such as DirectX, the best-known example, which let games use your GPU hardware. Both the 4080 and 3080 support the same API versions; the only difference is CUDA compute capability, which is 8.9 for the RTX 4080 and 8.6 for both 3080 versions.

| RTX 4080 | API / Compute Platform | RTX 3080 |
|---|---|---|
| 12 Ultimate (12_2) | DirectX | 12 Ultimate (12_2) |
| 4.6 | OpenGL | 4.6 |
| 3.0 | OpenCL | 3.0 |
| 1.3 | Vulkan | 1.3 |
| 8.9 | CUDA (compute capability) | 8.6 |
| 6.6 | Shader Model | 6.6 |

RT cores

One of the key selling points of all three RTX series is their RT Cores, which are specifically designed to handle real-time ray tracing. Ray tracing in 3D graphics simulates how light behaves in the real world. It was previously used mainly in offline rendering, such as CGI in movies, where rendered images are blended with live-action footage. Nvidia brought it to real-time gaming in 2018 with the Turing microarchitecture and its first-gen RT Cores.

The RTX 4080 features third-gen RT Cores that, according to Nvidia, offer up to 2x the ray tracing performance of the previous generation (i.e., the 4080 could be up to twice as fast as the 3080 at ray tracing). It also has more RT Cores than either 3080 version: 76 third-gen cores, versus 68 second-gen cores in the 3080 10 GB and 70 in the 12 GB version.

TMUs

Ray tracing falls into the “nice-to-have” basket because not that many games feature it yet. In contrast, textures are crucial in 3D images and environments. Every surface is covered by a texture. Textures are handled by Texture Mapping Units (TMUs). The RTX 4080 has 304 TMUs. The RTX 3080 10 GB has 272, and the 12 GB version has 280 TMUs.

Tensor Cores

Tensor Cores might seem like another "nice-to-have," but they play a significant role in the RTX 4080. They power DLSS (Deep Learning Super Sampling), which renders frames at a lower resolution and uses AI to upscale them, significantly improving frame rates. They do the same in the RTX 3080, but those are third-gen Tensor Cores, and DLSS 3's Frame Generation is exclusive to the RTX 40 series.

Fourth-generation Tensor Cores offer up to five times the throughput of the previous generation, greatly boosting performance when DLSS is turned on.
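All of the unit counts in this section follow from one number per card: its count of streaming multiprocessors (SMs). The sketch below assumes the per-SM layout used by both consumer Ampere (GA102) and Ada (AD103) GPUs: 128 CUDA cores, 4 Tensor Cores, 4 TMUs, and 1 RT core per SM. Because each SM has exactly one RT core, the SM counts (76, 68, 70) are taken from the RT core counts above; the dictionary and function names are illustrative only.

```python
# Assumed per-SM layout for consumer Ampere (GA102) and Ada (AD103) GPUs.
PER_SM = {"cuda_cores": 128, "tensor_cores": 4, "tmus": 4, "rt_cores": 1}

# SM counts per card (one RT core per SM, so SMs equal the RT core count).
SM_COUNTS = {
    "RTX 4080": 76,
    "RTX 3080 10 GB": 68,
    "RTX 3080 12 GB": 70,
}


def unit_counts(sms: int) -> dict[str, int]:
    """Derive CUDA core, Tensor Core, TMU, and RT core counts from the SM count."""
    return {unit: sms * per_sm for unit, per_sm in PER_SM.items()}


def percent_more(a: int, b: int) -> float:
    """Return how many percent more units `a` has than `b`."""
    return (a / b - 1) * 100


if __name__ == "__main__":
    for card, sms in SM_COUNTS.items():
        print(card, unit_counts(sms))
    cores_4080 = unit_counts(SM_COUNTS["RTX 4080"])["cuda_cores"]
    for card in ("RTX 3080 10 GB", "RTX 3080 12 GB"):
        cores = unit_counts(SM_COUNTS[card])["cuda_cores"]
        print(f"RTX 4080 vs {card}: {percent_more(cores_4080, cores):.1f}% more CUDA cores")
```

Under that assumption, the sketch reproduces every CUDA, Tensor, TMU, and RT figure in the spec table, and shows the 4080 with about 11.8% more CUDA cores than the 3080 10 GB and about 8.6% more than the 12 GB version.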

Winner: RTX 4080

Clock Speeds & Overclocking

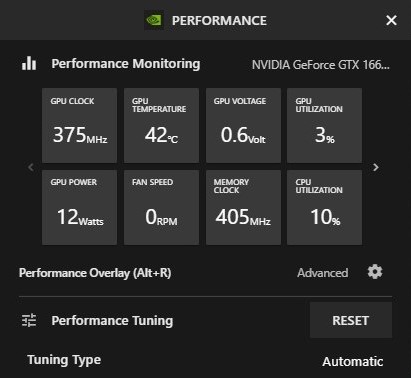

Clock speed measures a component's operating frequency. GPUs, CPUs, RAM, and VRAM all have clock speeds. Today's GPUs use dynamic clock speeds that officially range between the base clock (the lowest operating frequency under load) and the boost clock (the highest rated speed). In practice, the range is wider: if you're playing an older game with lower requirements, your card may not even reach its base clock.

On the other hand, in a performance-heavy game, your card can exceed its official boost clock without any issues, at least until it approaches its temperature limit. In my opinion, the RTX 30 series uses low clock speeds, and Nvidia has definitely pumped them up in the RTX 40 series.

The RTX 3080 10 GB version has a base clock of 1,440 MHz and a boost clock of 1,710 MHz. The 12 GB version has a lower base clock of 1,260 MHz (because it has more cores) and the same boost clock. The RTX 4080 has a base clock of 2,210 MHz and a boost clock of 2,505 MHz.
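To put those Founders Edition clocks side by side, here is a small sketch that divides the RTX 4080's clocks by each 3080 version's. The data layout and function name are hypothetical, chosen for illustration; keep in mind that clock speed alone doesn't determine performance, since per-clock architectural changes matter too.

```python
# Founders Edition clock speeds quoted in this article, in MHz.
CLOCKS_MHZ = {
    "RTX 4080": {"base": 2210, "boost": 2505},
    "RTX 3080 10 GB": {"base": 1440, "boost": 1710},
    "RTX 3080 12 GB": {"base": 1260, "boost": 1710},
}


def clock_ratio(new_mhz: int, old_mhz: int) -> float:
    """Return how many times higher `new_mhz` is than `old_mhz`."""
    return new_mhz / old_mhz


if __name__ == "__main__":
    new = CLOCKS_MHZ["RTX 4080"]
    for card in ("RTX 3080 10 GB", "RTX 3080 12 GB"):
        old = CLOCKS_MHZ[card]
        for kind in ("base", "boost"):
            ratio = clock_ratio(new[kind], old[kind])
            print(f"{kind} clock vs {card}: {ratio:.2f}x")
```

The ratios come out to about 1.53x (base) and 1.46x (boost) against the 3080 10 GB, and 1.75x (base) and 1.46x (boost) against the 12 GB version, whose base clock is lower.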

Many third-party variants come factory overclocked. The highest boost clock we know of for a 3080 10 GB variant is 1,905 MHz, and 1,890 MHz for a 12 GB variant. Boost clocks haven't been published for every announced RTX 4080 variant yet, but the highest listed so far is 2,656 MHz.

Back when clock speeds were static, we had to overclock our cards (raise their clock speeds) to get better performance. There aren't many titles (if any) that the RTX 3080, let alone the 4080, can't handle at stock settings, and the built-in dynamic boost does a great job of balancing power and performance.

You can overclock your GPU using Nvidia GeForce Experience or third-party software such as MSI Afterburner (see our guide). Other tools exist, but most people choose MSI Afterburner. GIGABYTE's AORUS software, for example, crashed my PC before it even started up.

| RTX 4080 (So far) | Boost Clock |
|---|---|
| Colorful iGame RTX 4080 Neptune OC*3 | 2,656 MHz |
| Colorful iGame RTX 4080 Vulcan OC*1 | 2,656 MHz |
| Gainward RTX 4080 Phantom GS | 2,640 MHz |
| Palit RTX 4080 GameRock OC | 2,640 MHz |
| ASUS ROG STRIX RTX 4080 GAMING OC | 2,625 MHz |

| RTX 3080 12 GB | Boost Clock |
|---|---|
| MSI RTX 3080 SUPRIM X LHR*1 | 1,890 MHz |
| ASUS ROG STRIX RTX 3080 GAMING OC*1 | 1,860 MHz |
| ASUS ROG STRIX RTX 3080 OC EVA Edition*1 | 1,860 MHz |
| GIGABYTE AORUS RTX 3080 MASTER*2 | 1,830 MHz |
| GIGABYTE AORUS RTX 3080 XTREME WATERFORCE*3, 4 | 1,830 MHz |

| RTX 3080 10 GB | Boost Clock |
|---|---|
| ASUS ROG STRIX RTX 3080 GAMING OC*4 | 1,905 MHz |
| ASUS ROG STRIX RTX 3080 GUNDAM*4 | 1,905 MHz |
| ASUS ROG STRIX RTX 3080 V2 GAMING OC*4 | 1,905 MHz |
| Colorful iGame RTX 3080 Vulcan X OC*1 | 1,905 MHz |
| GIGABYTE AORUS RTX 3080 XTREME*2 | 1,905 MHz |

*1 – Triple-slot

*2 – Quad-slot

*3 – Water cooled

*4 – Dual-slot

Winner: RTX 4080


VRAM & Memory Specs

Initially, the RTX 4080 was announced in two GDDR6X versions, 12 GB and 16 GB. The 12 GB version has since been canceled, leaving the 16 GB version. Its VRAM is clocked at 1,437 MHz and connected via a 256-bit memory bus, delivering an effective speed of 22.4 Gbps and a bandwidth of 716.8 GB/s.

The RTX 3080 was initially released with 10 GB of GDDR6X VRAM on a 320-bit bus. It's clocked at 1,188 MHz (a surprisingly low frequency), delivering an effective speed of 19 Gbps and a bandwidth of 760.0 GB/s.

The later 12 GB version of the 3080 uses a 384-bit bus. Its VRAM is also clocked at 1,188 MHz, delivering the same effective 19 Gbps but a higher bandwidth of 912 GB/s.
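The bandwidth figures above follow directly from two numbers: the bus width and the effective per-pin data rate. Peak bandwidth in GB/s is the bus width in bits times the effective speed in Gbps, divided by 8 bits per byte. A minimal sketch that reproduces all three values (the function and dictionary names are hypothetical):

```python
def memory_bandwidth_gbs(bus_width_bits: int, effective_gbps: float) -> float:
    """Peak memory bandwidth in GB/s.

    Each data pin on the bus moves `effective_gbps` gigabits per second,
    so multiply by the number of pins and divide by 8 to convert to bytes.
    """
    return bus_width_bits * effective_gbps / 8


# (bus width in bits, effective speed in Gbps) as quoted in this section.
CARDS = {
    "RTX 4080 16 GB": (256, 22.4),
    "RTX 3080 10 GB": (320, 19.0),
    "RTX 3080 12 GB": (384, 19.0),
}

if __name__ == "__main__":
    for card, (bus, speed) in CARDS.items():
        print(f"{card}: {memory_bandwidth_gbs(bus, speed):.1f} GB/s")
```

This prints 716.8, 760.0, and 912.0 GB/s. It also shows the trade-off described earlier: the 4080's memory runs faster per pin, but its narrower 256-bit bus leaves it with less total bandwidth than either 3080.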

Winner: RTX 4080


Performance

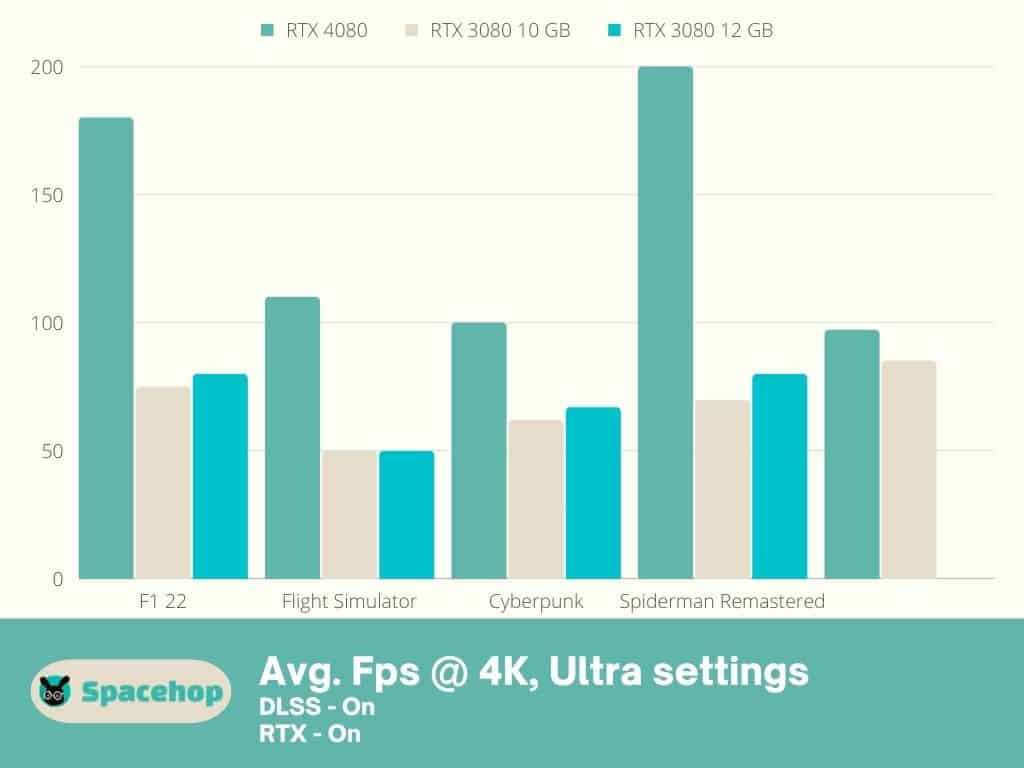

This is where it gets tricky. We don’t have much user feedback yet, and Nvidia hasn’t provided many benchmark tests to compare. From what we do know, DLSS makes all the difference here. I’ve graphed performance info (released by Nvidia) for four games using the RTX 4080, all at 4K ultra settings with DLSS and RTX on.

I then compared the same games on both versions of the RTX 3080 using similar PC builds. The RTX 4080 beats both 3080 versions without a doubt. I'm eager to see how the RTX 40 series compares once we get some user FPS results in a couple of months.

Winner: RTX 4080


Connectivity

The Founders Editions of all three cards (the 4080 and both 3080 versions) have one HDMI 2.1 port and three DisplayPort 1.4a outputs. Many third-party 3080 variants change the connection options, for example by adding two more HDMI ports (six ports total).

| RTX 4080 Output Type | Supported Resolution | RTX 3080 Output Type | Supported Resolution |
|---|---|---|---|
| HDMI 2.1 | 4K at 120Hz, 8K at 60Hz | HDMI 2.1 | 4K at 120Hz, 8K at 60Hz |
| DisplayPort 1.4a | 4K at 120Hz, 8K at 60Hz | DisplayPort 1.4a | 4K at 120Hz, 8K at 60Hz |

Winner: Draw

TDP

TDP (thermal design power) is the amount of heat a component's cooling system must be able to dissipate, and for graphics cards it's also a good indicator of how much power the card draws from your PSU under load. Heat isn't a concern unless you're running your card at 100% for long periods. The RTX 3080 10GB hit 79℃ (174.2°F), and the 12GB version hit 82℃ (179.6°F). Both are below the 93℃ (199.4°F) limit set by Nvidia.

We have yet to get any info about the RTX 4080's heat output, but I'm guessing it will run a bit cooler, since Nvidia has lowered the temperature limit to 90℃ (194°F) for the RTX 40 series. Then again, we wouldn't see cooling solutions taking up 3.65 slots if the 4080 didn't need serious cooling.
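The Fahrenheit values and the remaining thermal headroom in this paragraph are simple arithmetic. A small sketch using only the temperatures quoted above; the constant and function names are hypothetical, chosen for illustration:

```python
def celsius_to_fahrenheit(celsius: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return celsius * 9 / 5 + 32


# Nvidia's RTX 3080 temperature limit and the highest recorded
# Founders Edition temperatures quoted in this article, in °C.
RTX_3080_LIMIT_C = 93
RECORDED_C = {"RTX 3080 10 GB": 79, "RTX 3080 12 GB": 82}

if __name__ == "__main__":
    for card, temp in RECORDED_C.items():
        headroom = RTX_3080_LIMIT_C - temp
        print(
            f"{card}: {temp} °C ({celsius_to_fahrenheit(temp):.1f} °F), "
            f"{headroom} °C below the limit"
        )
```

Both cards stayed well within the limit: 14℃ of headroom for the 10 GB version and 11℃ for the 12 GB version.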

Nvidia states that the RTX 3080 10GB and 12GB versions require a 750W PSU to power their 320W (10GB) and 350W (12GB) TDPs. The RTX 4080 also has a 320W TDP and requires the same 750W PSU.
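Nvidia's PSU recommendation covers the whole system, not just the card. A rough sketch of the power budget left for the CPU, drives, and fans, assuming the card draws exactly its TDP; that's a simplification, since real draw varies with the workload and can briefly spike above TDP. The names are hypothetical:

```python
# Nvidia's recommended PSU wattage and each card's TDP, in watts.
RECOMMENDED_PSU_W = 750
TDP_W = {"RTX 4080": 320, "RTX 3080 10 GB": 320, "RTX 3080 12 GB": 350}


def remaining_budget_w(psu_w: int, gpu_tdp_w: int) -> int:
    """Power left for the rest of the system once the GPU draws its full TDP."""
    return psu_w - gpu_tdp_w


if __name__ == "__main__":
    for card, tdp in TDP_W.items():
        print(f"{card}: {remaining_budget_w(RECOMMENDED_PSU_W, tdp)} W for the rest of the build")
```

With a 750 W unit, that leaves roughly 430 W for the rest of an RTX 4080 or 3080 10 GB build and 400 W for a 3080 12 GB build.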

The RTX 30 series introduced a 12-pin PSU connector. As if that was not enough, the RTX 4080 uses a 16-pin PSU connector (adapter included). I’ve seen users report the RTX 4090’s 16-pin connectors catching fire and melting, essentially destroying the cards. Something to consider before purchasing an RTX 40 card.

Winner: RTX 3080

Pricing & Availability

Have you heard of Moore's Law? It's the observation that the number of transistors on a chip doubles roughly every two years, which has long meant more performance for the money. Well, Jensen Huang (co-founder and CEO of Nvidia) doesn't seem to like this idea very much and recently said that "Moore's Law is dead." As it turns out, that law might not be dead, at least in the case of the RTX 4080.

The RTX 4080 was announced with an MSRP of $1,199.

Before it hit the shelves, I anticipated much higher price tags for all 4080 variants. Customers weren't happy with the RTX 4080's price in terms of performance per dollar. Rumors say Nvidia is considering a price cut for the 4080, and listings show prices hovering right around MSRP, even dipping below it in some cases.

Listings for the 4080 Founders Edition range from 15% to 50% above MSRP. One of the best 4080 variants, the ASUS ROG Strix, is priced just 29% above MSRP and is currently on sale, not even two months after release. This ZOTAC Gaming variant is priced at MSRP. Those of you who remember prices and availability when the RTX 30 series came out know what a big deal this is!

The RTX 3080 10 GB was priced at $699 upon release, but I don’t think anyone actually paid that “little” back then. The 12GB version was priced slightly higher at $799. We all know what happened with GPU prices over the past two years. Thankfully, that’s behind us.

The No. 1 on our RTX 3080 10 GB list, the ASUS ROG Strix variant (White OC Edition), is also the most expensive available, priced at 114% above MSRP. Wow! ZOTAC Gaming variants seem to be available at the most reasonable prices. This ZOTAC Gaming variant is priced at 11% above MSRP, though it's not an OC card.

One of the best RTX 3080 12 GB variants from our list, the GIGABYTE AORUS variant, is priced at 87% above MSRP. The cheapest deal currently available is an MSI Gaming variant, priced 1% below MSRP.
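All of the "above MSRP" figures in this section use the same formula: (price − MSRP) / MSRP × 100. The sketch below applies it to the launch MSRPs quoted above to show how much more the RTX 4080 costs than each 3080 version. The function and dictionary names are hypothetical, and a positive result means above MSRP while a negative one means below it.

```python
def percent_vs_msrp(price: float, msrp: float) -> float:
    """Signed percentage difference between a price and a reference MSRP.

    Positive values are above the MSRP; negative values are below it.
    """
    return (price - msrp) / msrp * 100


# Launch MSRPs quoted in this article, in US dollars.
MSRP_USD = {"RTX 4080": 1199, "RTX 3080 10 GB": 699, "RTX 3080 12 GB": 799}

if __name__ == "__main__":
    for card in ("RTX 3080 10 GB", "RTX 3080 12 GB"):
        gap = percent_vs_msrp(MSRP_USD["RTX 4080"], MSRP_USD[card])
        print(f"RTX 4080 MSRP vs {card}: {gap:+.1f}%")
```

The 4080's MSRP works out to about 71.5% higher than the 3080 10 GB's and 50.1% higher than the 12 GB version's. To check a listing yourself, call `percent_vs_msrp(listed_price, msrp)`; since prices change over time, the percentages quoted above are snapshots.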

*Prices might be different at the time of reading.

Conclusion

There's no doubt which card wins this 4080 vs 3080 comparison. From what we know so far, the RTX 4080 blows the 3080 away in almost every aspect, especially performance. Sadly, not all of us have the means to purchase an enthusiast-class GPU, and I predict that the MSRP will be trampled on and exceeded. With the RTX 4080 on sale since November 16th, we'll have the answer soon enough.
