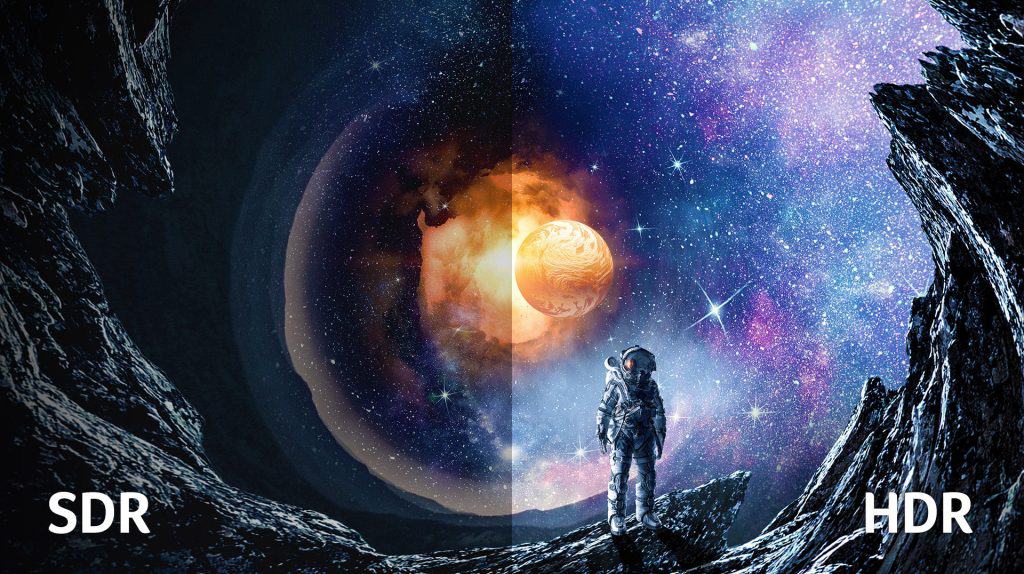

Are you buying a TV? Can’t decide what to choose? Don’t know what SDR or HDR labels mean? You need to compare SDR vs HDR features before you choose. Will the colors and shadows look better on your screen depending on whether it’s SDR or HDR?

Find out why it is important to understand SDR vs HDR and what other features you need to pay attention to before buying your new TV.

Let’s compare the two and see the advantages and disadvantages of SDR and HDR, check out some of the most used HDR standards, and find out if the improved features are worth the price.

SDR vs HDR – Introduction

So, first of all, what is dynamic range? It’s the ratio between the brightest and the darkest points of an image (pure white and pure black).

SDR stands for standard dynamic range, the format traditionally used for video and cinema. It allows a maximum luminance of 100 cd/m2 (candelas per square meter) and a minimum of 0.1 cd/m2, covers the Rec.709 / sRGB color gamut, and uses a conventional gamma curve signal.

On the other hand, the HDR technique is not so straightforward. It relies on HDR metadata (in most cases), which is basically additional information sent to the display on how to show the content correctly. That means both shadows and highlights can show more detail.

The result: a more vivid and realistic visual experience.

And yes, you got it right; there are standards that a TV or other device must meet to be labeled as an HDR device: HDR10, HDR10+, HLG, and Dolby Vision. Otherwise, you won’t be able to see HDR content the way you are supposed to.

And now you’re probably wondering why you need it. Well, it’s mostly because human vision is far more capable than any display. We can see a wide range of shades, colors, and brightness levels at the same moment, while our devices struggle with the transition from bright to dark.

Standard Dynamic Range (SDR)

SDR has been with us for a long time, ever since black-and-white TVs appeared. Or, more precisely, since 1934, when the first CRT (cathode-ray tube) TV was manufactured.

The first color TV (1954) also used SDR, and you probably know that with older TVs, the only way to improve the picture is to adjust brightness, color, and contrast.

These three are the main parameters that SDR uses for light intensity, which impacts our experience as consumers of visual content. But sometimes, it’s just not enough.

Its maximum luminance goes up to 100 cd/m2, and the minimum is limited to 0.1 cd/m2. The contrast ratio is 1,200:1, the color depth is 8 bits, and it can display up to 256 shades of each primary color.

Human vision can perceive a much broader range of luminance and color gamut levels than SDR, which means we can simultaneously see details in shadows and highlights.

That allows us to see the sunset and everything in our visual range in authentic colors with all the details, but if we take a camera to shoot it, the image would probably be less impressive. And that’s what spurred the development of HDR technology.

But, when talking about SDR TVs, we mustn’t forget two things:

- The new generation of SDR TVs performs pretty well, especially those with 4K resolution.

- The best-selling TV on Amazon is the Smart TV TCL 32 (TCL 32-inch 3-Series 720p Roku Smart TV – 32S335, 2021 Model), which was rated a high 4.6 out of 5 stars by more than 68,000 Amazon buyers. And no, it’s not an HDR TV.

High Dynamic Range (HDR)

Though HDR emerged in 2014, the technique is not entirely new, at least not in photography. Back in the 1850s, Gustave Le Gray, a French photographer, started combining two negatives in his Ocean series of photos to capture both the sun and the reflection in the water.

And that’s basically what HDR photography is all about: merging several images to create a final photo that encompasses different exposures in one shot.

The situation is a bit more complex when it comes to video content. The HDR technique extends SDR’s luminance limits, meaning white can be brighter, and black can be even darker. In other words, the dynamic range is broader.

HDR is not about increasing the number of pixels but about making each pixel better. It lets you see more details when the picture is very bright or very dark, which is not the case with SDR.

Now, not all devices can display HDR video, especially the older ones. It’s possible only for TVs, monitors, or projectors that comply with HDR10, HDR10+, HLG, or Dolby Vision standards.

HDR10 open standard

HDR10 is the most common HDR format, which emerged in 2015, and today it’s used by some of the most famous streaming services, such as Netflix, YouTube, and Amazon.

But it’s not just the streaming services; HDR10 is also supported by most big TV manufacturers, such as LG, Samsung, Panasonic, Sony, and Hisense.

If you are not sure whether your TV is SDR or HDR, the best way to check is to open the picture settings (for example, Home > Settings > Preferences > Picture; the exact path varies by manufacturer). If you see options such as HDR-Vivid or HDR-Video, your TV supports HDR content.

One of the reasons most manufacturers use HDR10 is that it was the first free solution on the market when HDR content emerged.

When it comes to technical details, HDR10 has a peak brightness target of 1,000 nits (a nit is one candela per square meter), a 10-bit color depth, and can display everything in the Rec.2020 color space.

HDR10 uses static metadata, which is considered one of the disadvantages of this format. It sends the information to your device at the beginning of the video, and your device uses that same information the whole time.

Nevertheless, some HDR10 TVs, such as the Samsung 65″ Class AU8000 Crystal UHD Smart TV, have excellent reviews. It was rated 4.6 stars by 6,859 users on Amazon.


HDR10+

HDR10+ was created in 2017 by 20th Century Fox, Panasonic, and Samsung, as an improved HDR10 format for HDR content.

It’s an open standard, just like HDR10, and it enhances the color and contrast of the media. We have to mention, though, that it is free for streaming services but not for manufacturers. If a TV manufacturer wants to display the HDR10+ label, it needs to buy a license.

As you might guess, all 2016 and newer Samsung UHD TVs support HDR10+, as do the 2020 Terrace, Sero, Frame, and QLED models.

What is the difference between HDR10 and HDR10+?

The innovation lies in how information is sent to the device. While HDR10 uses static metadata, the HDR10+ format has dynamic metadata, sending different information for every scene.
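
To make the difference more concrete, here is a toy sketch in Python. It is not the actual HDR10 or HDR10+ algorithm: real TVs use tone-mapping curves rather than a single scale factor, and the `Scene` class, the function names, the scene brightness values, and the 500-nit display are all made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Scene:
    name: str
    peak_nits: float  # brightest highlight in the scene (made-up value)


def scale_factor(content_peak: float, display_peak: float) -> float:
    """How much to dim content so its peak fits the display (1.0 = untouched)."""
    return min(1.0, display_peak / content_peak)


def static_scaling(scenes, display_peak):
    # Static metadata: one peak value describes the whole video,
    # so every scene gets the same treatment.
    video_peak = max(scene.peak_nits for scene in scenes)
    factor = scale_factor(video_peak, display_peak)
    return {scene.name: factor for scene in scenes}


def dynamic_scaling(scenes, display_peak):
    # Dynamic metadata: each scene carries its own peak value,
    # so each scene is adjusted on its own.
    return {
        scene.name: scale_factor(scene.peak_nits, display_peak)
        for scene in scenes
    }


# Hypothetical video with one dark scene and one very bright scene.
scenes = [Scene("night street", 200.0), Scene("sunset", 1000.0)]
DISPLAY_PEAK = 500.0  # assumed peak brightness of the TV, in nits

print(static_scaling(scenes, DISPLAY_PEAK))   # {'night street': 0.5, 'sunset': 0.5}
print(dynamic_scaling(scenes, DISPLAY_PEAK))  # {'night street': 1.0, 'sunset': 0.5}
```

In this simplified model, the static approach dims the night scene just because a bright sunset appears somewhere else in the video, while the per-scene approach leaves it untouched.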

Dolby Vision

Dolby Vision was the first available HDR format, and it was introduced back in 2014 by Dolby Laboratories.

It supports up to 8K resolution, 10,000 nits of brightness, and 12-bit color, and it uses dynamic metadata. Because no consumer device can fully reach these specs yet, and because it’s a licensed format, it is not as widespread as HDR10 or HDR10+.

Despite the license that manufacturers have to pay for, one of the best-rated TVs, the LG C1 OLED, supports both HDR10 and Dolby Vision.

In 2020, Dolby Laboratories introduced an update, Dolby Vision IQ, which adapts brightness to ambient light.

It requires built-in sensors to read how much ambient light is present, adjusting the video you watch accordingly. Hardware that supports Dolby Vision IQ is rare, though LG and Panasonic have some TVs with the required sensors.

HLG (Hybrid Log-Gamma)

The BBC and NHK presented Hybrid Log-Gamma (HLG) in 2015, around the same time as HDR10.

Like HDR10, HLG has a 10-bit color depth and can display everything in the Rec.2020 color space.

The main differences are:

- HLG doesn’t use metadata.

- It is compatible with both SDR and HDR devices.

- HLG’s highest luminance depends on the display device.

- HLG was developed for broadcast TV and live videos, while HDR10 was mainly focused on internet streaming and movies.

HDR vs SDR – Features Face to Face

Now that we have explained the difference between the two and the variations of available HDR standards, let’s see how their features differ.

| Features | SDR | HDR10 | HDR10+ | Dolby Vision | HLG |
|---|---|---|---|---|---|
| Maximum luminance | 100 cd/m2 | 1,000 cd/m2 | 4,000 cd/m2 | 10,000 cd/m2 | 1,000 cd/m2 |
| Minimum luminance | 0.1 cd/m2 | 0.05 cd/m2 | 0.05 cd/m2 | 0.005 cd/m2 | 0.005 cd/m2 |
| Contrast ratio | 1,200:1 | 20,000:1 | 20,000:1 | 200,000:1 | 200,000:1 |
| Color depth | 8 bits | 10 bits | 10 bits | 12 bits | 10 bits |
| Shades per primary color | 256 | 1,024 | 1,024 | 4,096 | 1,024 |
| Target color space | Rec. 709 | Rec. 2100 | Rec. 2020 | Rec. 2020 | Rec. 2100 |
| Max resolution | 4K | 4K | 8K | 8K | 4K |
| Metadata | None | Static | Dynamic | Dynamic | None |
| Reference standard | N/A | ST 2084 EOTF | ST 2084 EOTF | ST 2084 EOTF | Hybrid Log-Gamma (HLG) EOTF |
| Primary use | N/A | Streaming or playback | Streaming or playback | Streaming or playback | Broadcasting, live video |
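
If you are curious about the ST 2084 EOTF listed in the table, here is a minimal Python sketch of it. This curve, also known as PQ (perceptual quantizer), converts a signal value between 0 and 1 into a brightness level in cd/m2. The constants come from the SMPTE ST 2084 standard, while the function name `pq_eotf` is simply our own choice for this illustration.

```python
# Constants defined in SMPTE ST 2084 (the PQ curve).
M1 = 2610 / 16384
M2 = 2523 / 4096 * 128
C1 = 3424 / 4096
C2 = 2413 / 4096 * 32
C3 = 2392 / 4096 * 32


def pq_eotf(signal: float) -> float:
    """Convert a normalized PQ signal (0.0 to 1.0) to luminance in cd/m2."""
    if not 0.0 <= signal <= 1.0:
        raise ValueError("signal must be between 0.0 and 1.0")
    p = signal ** (1 / M2)
    return 10000.0 * (max(p - C1, 0.0) / (C2 - C3 * p)) ** (1 / M1)


print(pq_eotf(0.0))  # 0.0, pure black
print(pq_eotf(1.0))  # 10000.0, the top of the PQ scale

# Print a few points in between to see the shape of the curve.
for signal in (0.25, 0.5, 0.75):
    print(f"signal {signal:.2f} -> {pq_eotf(signal):.1f} cd/m2")
```

Notice that the top of the curve sits at exactly 10,000 cd/m2, which is the maximum luminance listed for Dolby Vision, and that most of the signal range is spent on the darker and mid-level tones.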

Brightness

While SDR provides a maximum luminance of 100 cd/m2 and a minimum of 0.1 cd/m2, HDR standards go way beyond that, with up to 10,000 cd/m2 maximum luminance and a minimum of 0.005 cd/m2 (Dolby Vision, for example).

Color gamut

Now, the color gamut is the subset of colors that a given device or standard can accurately represent.

SDR targets the Rec.709 color gamut, while the HDR formats target the much wider Rec. 2020 gamut. Rec. 2100, the standard that defines HDR signals such as HLG, uses the same color primaries as Rec. 2020.

What does it mean? An HDR TV can usually show more saturated colors than an SDR one, so the picture looks richer and more vivid.

Color depth

Color depth represents the number of bits of information used to tell a pixel which color to display.

And as you might guess, SDR again loses the battle with just 8 bits, compared to HDR’s 10 or 12 bits.
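
Here is a quick Python sketch of what those bit counts mean in practice. It assumes three color channels (red, green, and blue) with the same number of bits each; the function names are our own.

```python
def shades_per_channel(bits: int) -> int:
    """Number of distinct levels one color channel can take."""
    return 2 ** bits


def total_colors(bits: int) -> int:
    """Number of distinct colors with three channels (red, green, blue)."""
    return shades_per_channel(bits) ** 3


for bits in (8, 10, 12):
    print(
        f"{bits}-bit: {shades_per_channel(bits):,} shades per channel, "
        f"{total_colors(bits):,} colors in total"
    )
# 8-bit: 256 shades per channel, 16,777,216 colors in total
# 10-bit: 1,024 shades per channel, 1,073,741,824 colors in total
# 12-bit: 4,096 shades per channel, 68,719,476,736 colors in total
```

Every extra bit doubles the number of shades per channel, which is why going from 8 to 10 bits takes you from about 16.7 million to over a billion colors.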

Dynamic range

Now, we all know that contrast plays an important part in visual experience. The dynamic range represents the colors and details that can be displayed between the darkest black and the brightest white.

SDR covers a narrower range, while HDR broadens it.
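
To put some numbers on it, here is a small Python sketch that turns the luminance limits from the comparison table into a ratio and into "stops", where each stop is a doubling of brightness. The function names are our own, and the result is only the ratio implied by those two limits, so it may differ a little from the contrast ratios quoted for real devices.

```python
import math


def contrast_ratio(max_nits: float, min_nits: float) -> float:
    """Ratio between the brightest and the darkest luminance."""
    return max_nits / min_nits


def stops(max_nits: float, min_nits: float) -> float:
    """Dynamic range in stops (each stop doubles the brightness)."""
    return math.log2(max_nits / min_nits)


# Luminance limits (max, min) in cd/m2, taken from the comparison table.
formats = {"SDR": (100.0, 0.1), "HDR10": (1000.0, 0.05)}

for name, (max_nits, min_nits) in formats.items():
    ratio = contrast_ratio(max_nits, min_nits)
    print(f"{name}: {ratio:,.0f}:1, about {stops(max_nits, min_nits):.1f} stops")
# SDR: 1,000:1, about 10.0 stops
# HDR10: 20,000:1, about 14.3 stops
```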

Standout Features

When paired with a high-performance TV, HDR can provide an excellent visual experience.

Price

Though SDR lost every battle when it comes to colors, it seems that it can still offer a lot to the average consumer. Price is the one area where SDR TVs clearly win, regardless of the size of the screen.

On Amazon, for example, you can find a 24-inch Insignia Fire TV with excellent reviews for $119.99, while a 43-inch 4K Insignia Fire TV costs $249.99.

On the other hand, HDR TVs cost more, with prices ranging from $579.99 for a 50-inch 4K Samsung UN50TU7000 Ultra HD Smart LED TV (2020) to $2,000, $3,000, or even more. Keep in mind that these prices are subject to change but were accurate at this post’s publication.

Conclusion

We hope we answered the question of which is better, SDR or HDR! If you don’t pay much attention to the colors and the details in the shadows, you might be satisfied with an SDR TV.

On the other hand, if you have an eye for details and want an exceptional visual experience while watching TV, you might want to go for the HDR solution.

Whatever TV you choose, don’t forget to check its dynamic range, compatibility with HDR, resolution, refresh rate, how many HDMI ports it has, and other technical specifications.

And if you enjoy watching BBC documentaries, you might want to check whether your TV supports HLG.
